You're Not Building. You're Approving. Here's How to Take It Back.

do this in 2026

You’re generating more code than ever and understanding less than ever.

That’s the trade you made. Maybe you didn’t notice. The output kept coming, the files, the functions, the features, and you kept accepting. Somewhere over the last eighteen months you stopped asking whether you understood what was being built and started asking whether it passed muster.

Some tests pass. Your understanding doesn’t.

I know because I’ve done it too. I’ve shipped in afternoons work that should have taken weeks. I’ve prompted my way through domains I had no business touching. I’ve felt the rush of watching a feature materialize from a paragraph of intent, marveled at the magic, and moved on to the next thing without pausing to ask what I’d actually learned.

Nothing. I’d learned nothing. I’d generated output.

The vibe coding discourse misses this entirely. One camp celebrates Ralph Wiggum loops as AGI. The other warns about security vulnerabilities and hallucinated dependencies. Both are arguing about the code that is created.

Neither is really talking about what happens to you.

Vibe Coding. Collins made it Word of the Year. Stanford says junior developer employment dropped 20%. That METR study found developers thought they were 20% faster with AI but measured 19% slower. Everyone has data. Everyone has takes.

Few have really looked in the mirror.

I. How You Lie to Yourself Now

You open the IDE or Terminal or, soon, some RTS-game control center. You think: Today I’ll be productive.

But productive at what, exactly?

Here’s what you’re actually doing: translating half-formed intentions into prompts, then accepting whatever comes back if it approximately works. You’re not building. You’re approving. You’ve become a Tier 1 quality assurance department for an inference machine that never gets tired but sure can cost a lot.

This felt revolutionary twelve months ago. Now it feels like drowning in choices you don’t fully understand.

The true shift is that you no longer need to know what the code does to ship it. And you’ve quietly accepted this as normal.

It’s a Faustian bargain.

When an agent turns natural language into working software, the craft isn’t writing code. The craft is knowing what to write. Intent is the new source code. Your specification (ya know, that once-bureaucratic afterthought you skimmed and forgot) has become the primary artifact of human control.

The sequence of prompts in your sessions and exchanges produces the product. That is the source.

Most people haven’t internalized this. They’re still trying to get better at prompting when they should be getting better at wanting. At specifying and intervening and correcting. At knowing, with crystalline precision, what they’re actually trying to build.

Repeat this and then repeat it again: “Do I know what I want?”

Usually you don’t. Usually you’re hoping the AI will figure it out for you.

It won’t.

II. Context Dies in Transit

Every piece of software you’ve ever cursed at was, at some point, somebody’s clear intention.

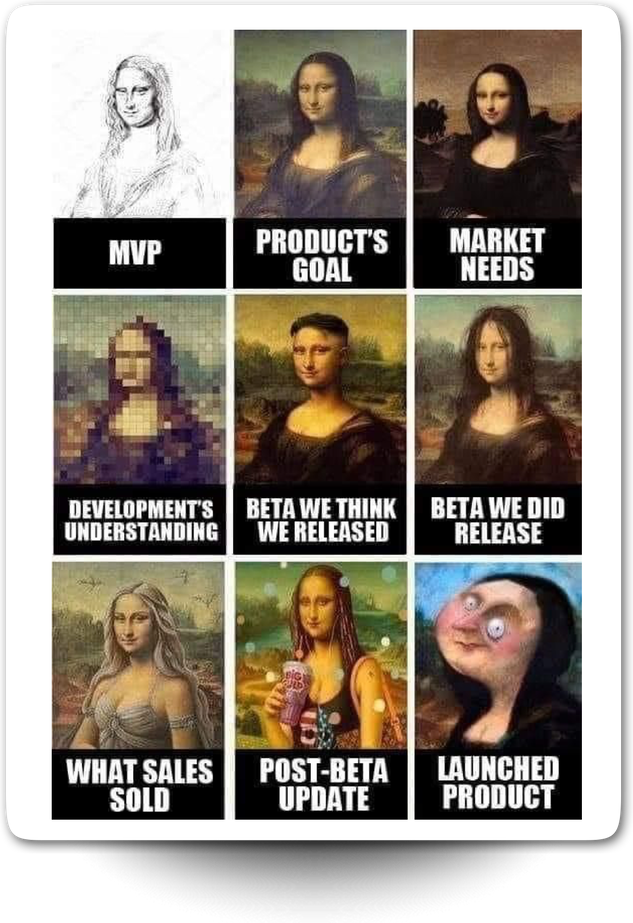

Think about that. The spaghetti code, the inexplicable architecture, the feature that does something almost-but-not-quite useful: all of it started as a reasonable idea in someone’s head. Then it got translated. Meetings to notes. Notes to tickets. Tickets to code. Code to commits. Reviews to revisions.

By the time it shipped, the original intent had been compressed, lossy-encoded, and re-interpreted so many times that the final product bears only ancestral resemblance to the initial vision.

This is software’s original sin. The intent gap. AI doesn’t fix this.

Now the translations happen faster. Which means the degradation happens faster. Which means you ship things that stray further from what you would have meant had you considered it deeply. But because everyone claims to be shipping at light speed, everyone calls it progress.

The teams winning right now have figured something out that I suspect many of the rest are still missing.

They’re not just versioning code. They’re versioning decisions. Reasoning. The why behind every choice. They treat conversations with agents as “commits”. They make everything implicit become explicit.

This is the new discipline. Call it context engineering for now. Most people don’t know it exists, let alone how to operationalize it. They think the job is prompt engineering. But prompt engineering is just typing. Context engineering is architecture. It’s building the information environment that makes the AI’s outputs trustworthy, and therefore useful.

Without it you’re just generating plausible garbage at scale.
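If "versioning decisions" sounds abstract, here is one rough way it could look. This is a minimal sketch, not anyone's actual tooling: the `DecisionRecord` name, its fields, and the payment-webhook example are all hypothetical, made up to show the shape of the idea. The point is only that the why gets written down in a form you can commit next to the code.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import date


@dataclass
class DecisionRecord:
    """One versioned decision: what was chosen and, above all, why.

    Every field name here is an illustrative assumption, not a standard.
    """
    title: str
    intent: str      # what you were actually trying to achieve
    decision: str    # what you chose
    reasoning: str   # the why that the code alone never shows
    rejected: list[str] = field(default_factory=list)     # alternatives considered
    prompt_refs: list[str] = field(default_factory=list)  # pointers to agent sessions
    decided_on: str = field(default_factory=lambda: date.today().isoformat())

    def to_json(self) -> str:
        # Plain JSON so the record can live in version control beside the code.
        return json.dumps(asdict(self), indent=2)


# Hypothetical example data, for illustration only.
record = DecisionRecord(
    title="Retry policy for the payment webhook",
    intent="Never charge a customer twice, even if the provider resends.",
    decision="Idempotency key per event; at most three retries.",
    reasoning="Duplicate deliveries are expected, so safety beats throughput.",
    rejected=["Unlimited retries with backoff"],
    prompt_refs=["sessions/example-session.md"],
)
print(record.to_json())
```

The `rejected` field is the one most people skip, and it is probably the most useful: it records the option you already thought about, so nobody (including the agent) re-proposes it six weeks later.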

III. Stop Treating Iteration Like Failure

The back-and-forth feels wrong.

You prompt. It misses. You clarify. It misses differently. You rephrase. It gets closer. You adjust. It breaks something else. Four rounds later you have something that works, but the whole experience felt like flailing.

So you conclude: I must be bad at this. If I were better, I’d nail it in one shot.

No. You wouldn’t. Nobody does. The one-shot fantasy is a lie sold by bait videos and Twitter threads optimized for engagement.

Iteration isn’t failure. Iteration IS the method.

This is counterintuitive only if you’ve bought into the myth of the perfect prompt. Slash commands and Skills try to get it right immediately. Questions iterate toward understanding.

When you treat the conversation with an AI as the cognitive process itself, and not a broken version of some better, smoother process, that’s when “everything changes,” to quote the threadbois. The dialogue surfaces assumptions you didn’t know you had. The friction reveals specification gaps that would have shipped as bugs.

The most valuable thing you can do is ask a question the agent doesn’t know how to answer. That’s where your judgment lives. That’s where you’re actually needed.

Slow down inside the iteration. Don’t just rephrase and retry. Ask why it missed. Ask what you failed to specify. Ask what you assumed was obvious that wasn’t.

The AI’s confusion is a mirror of your own unclear thinking. Use it.

IV. The Things That Refuse to Compress

When everything can be generated, what remains valuable?

This is the question lurking beneath the junior developer employment numbers, beneath the job market anxiety, beneath the Reddit threads full of engineers wondering in public if they chose the wrong career.

The answer is: everything that won’t compress.

You can’t compress the pause when a senior engineer says, “This works, but here’s why we don’t do it this way.” That pause contains years of shipped products, failed launches, production outages, and hard-won intuition. It can’t be prompted. It can’t be retrieved from training data. It lives in a human being who went through something.

You can’t compress trust. Or the scar tissue from high-stakes incident resolution. Or knowing in your bones when something’s wrong before you can articulate why.

You can’t compress suffering. What saddens me is that every shortcut around struggle is a lesson lost.

The models know everything, but they’re brilliant amnesiacs who forget the conversation the moment it ends. They have no continuity of experience, no accumulation of judgment, no sense of what it cost to learn something.

What happens when we become the same? Dependent on machines that compress away not just our work, but our need to think?

I don’t have an answer but I do have a practice.

Calculated sabotage: deliberately choosing difficulty to preserve capacity for difficulty. You don’t have to outsource everything. Some problems are worth solving yourself—not because it’s efficient, but because the solving builds you.

When you solve something yourself, you leave with more than the answer. You leave with the shape of the problem, how it resisted you, how you broke through. That stays. That makes you someone who can solve the next thing.

Choose what to keep. Choose what to struggle with. The choice itself is the craft now.

V. The Hierarchy of Trust

Here’s a practical problem that isn’t well solved: you can’t read everything the AI writes.

Because there’s too much. When an agent produces a thousand lines in response to a prompt, you have to skim, because truly reading takes time and generating takes minutes.

So you face a choice: read everything and lose the speed advantage, or trust and risk shipping things you don’t understand.

Most people default to trust. They accept. They merge. They deploy. And mostly it works.

Until it doesn’t.

The history of software is littered with bugs that passed every test and fooled every reviewer only to fail catastrophically in production. When humans write those bugs, we conduct postmortems and update practices. But what do we do now?

The solution isn’t “read everything” or “trust everything.” The solution is building hierarchies of trust.

Some outputs demand full review: security-critical code, financial logic, anything touching user data. You pay the time cost because the risk cost is higher.

Some outputs can be verified by proxy: if the tests pass, if the types check, if the linter’s happy, you trust the machinery you’ve built around the output rather than the output itself.

Some outputs can be trusted provisionally: low-stakes code, isolated functions, things you can rip out easily if they break. Ship fast, monitor closely, fix quickly.

The discipline is knowing which category you’re in before you prompt. The failure mode is treating everything the same: either paranoid review that destroys your speed or blanket trust that destroys your codebase.

Build the hierarchy. Know where you are in it. Adjust based on stakes, not comfort.
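One way to make the hierarchy explicit is to write it down as rules before you prompt. The sketch below is an assumption-heavy illustration: the path patterns, the `Tier` names, and the choice to default unmatched files to proxy verification are all mine, and your stakes will draw the lines differently.

```python
from enum import Enum
from fnmatch import fnmatch


class Tier(Enum):
    FULL_REVIEW = "read every line"
    PROXY = "trust the tests, types, and linter"
    PROVISIONAL = "ship, monitor, be ready to rip it out"


# Hypothetical path patterns. First match wins, so the
# highest-stakes rules are listed first.
RULES = [
    ("*auth*", Tier.FULL_REVIEW),
    ("*billing*", Tier.FULL_REVIEW),
    ("*user_data*", Tier.FULL_REVIEW),
    ("scripts/*", Tier.PROVISIONAL),
    ("experiments/*", Tier.PROVISIONAL),
]


def tier_for(path: str) -> Tier:
    """Return the trust tier for a single file path."""
    for pattern, tier in RULES:
        if fnmatch(path, pattern):
            return tier
    # Assumed default: anything unclassified is verified by proxy.
    return Tier.PROXY


def tier_for_change(paths: list[str]) -> Tier:
    """A change is only as trustworthy as its riskiest file."""
    tiers = {tier_for(p) for p in paths}
    for tier in (Tier.FULL_REVIEW, Tier.PROXY, Tier.PROVISIONAL):
        if tier in tiers:
            return tier
    return Tier.PROXY  # empty change: nothing to escalate


change = ["scripts/cleanup.py", "src/billing/invoice.py"]
print(tier_for_change(change).value)
```

Here a throwaway script and a billing file land in the same change, so the whole change gets full review. That is the "stakes, not comfort" rule expressed as a lookup: the category is decided by what the code touches, not by how confident the output looks.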

Learn to ask of all actions, “Why are they doing that?” Starting with your own. (Marcus Aurelius, Meditations X.37)

VI. What You Should Actually Do

The cost of trying has collapsed. What once required months can require minutes. What once required syntax expertise requires English.

This asymmetry has consequences. It means the barrier is no longer knowledge. It’s not skill. It’s not credentials. It’s clarity.

Do you know what you want? Can you specify it precisely? Can you recognize it when you see it?

If yes, you can build almost anything. If no, you’ll generate endless variations of the wrong thing.

Here’s my protocol:

Write specifications like you’re writing source code. Version them. Review them. Treat ambiguity as a bug. Every vague adjective is a potential defect. Every assumed bit of context is a future miscommunication. The precision of your intent determines everything downstream.

Build the context before you prompt. Gather the examples, constraints, patterns, and anti-patterns your agent needs. Don’t expect it to read your mind. Build the information environment that makes success probable instead of lucky.

Treat iteration as thinking. Stop trying to nail it in one shot. The conversation is the cognitive process. Each miss is information about what you failed to specify. Use it.

Preserve shared understanding. Your team’s collective working memory matters more than any individual’s output. Document decisions, not just outcomes. Link prompts to implementations. The context you’re too busy to preserve today becomes the bug you can’t debug tomorrow.

Build trust systems. Establish what gets reviewed and what gets trusted. Make it explicit. Revisit it as stakes change.

Choose difficulty strategically. Some struggles build capacity. Some don’t. Know the difference. Protect your ability to do hard things by occasionally doing hard things.

Start. The question now is “do I know what I want?” If you don’t know, the AI won’t save you. If you do know, the AI you use will multiply you.
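"Treat ambiguity as a bug" from the first item of that protocol can be taken almost literally. What follows is a toy sketch, assuming a hand-picked list of vague words (`VAGUE_WORDS` is my starter set, not a vetted vocabulary) and a hypothetical two-line spec. It will not understand your intent; it only points at words that usually hide a missing number.

```python
import re

# A starter list of vague words. This is an assumption; extend it for your domain.
VAGUE_WORDS = {
    "fast", "simple", "clean", "robust", "scalable",
    "intuitive", "modern", "appropriate", "etc",
}


def lint_spec(spec: str) -> list[tuple[int, str]]:
    """Return (line_number, word) for every vague word found in the spec."""
    findings = []
    for lineno, line in enumerate(spec.splitlines(), start=1):
        for word in re.findall(r"[a-z]+", line.lower()):
            if word in VAGUE_WORDS:
                findings.append((lineno, word))
    return findings


# Hypothetical spec: the first line is a wish, the second is a specification.
spec = """The export should be fast and robust.
Export at most 10,000 rows as CSV in under 2 seconds."""

for lineno, word in lint_spec(spec):
    print(f"line {lineno}: '{word}' is ambiguous; say what you would measure")
```

The first line gets flagged twice, for "fast" and "robust". The second line passes, because it already answers the question the linter is really asking: what would you measure?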

VII. The Question To Confront Head On

You’re not supposed to be asking how to create software with AI now. That question is a proxy for a much harder one.

You need to be asking: What kind of builder am I becoming?

The tools will keep improving and capabilities will expand. The discourse will oscillate between ecstasy and doom forever. None of it matters as much as this:

Are you becoming someone who understands what you build? Or someone who ships what you don’t?

Both paths are now available. Both have their own logic. You can generate endlessly, ship constantly, measure productivity by output volume. Or you can generate selectively, understand deeply, measure productivity by clarity of intent.

The first path is faster. The second path is yours.

The industry is sobering up. The wild promises of 2024, somewhat realized in 2025, are meeting the messy realities of 2026. The people who treated AI as a replacement for thinking will drown in code they can’t debug. The people who treat AI as an amplifier for clarity are shipping things they’re proud of.

You get to choose which kind of person you become.

The practice compounds even when the artifacts fade. Every specification you write with precision, every iteration you treat as thinking, every deliberate choice to struggle when you could have delegated leaves a mark. Not on the codebase. On you.

That’s what lasts. Not the code. You.

The choice is still yours.